Introduction to Parallel and Cloud Programming

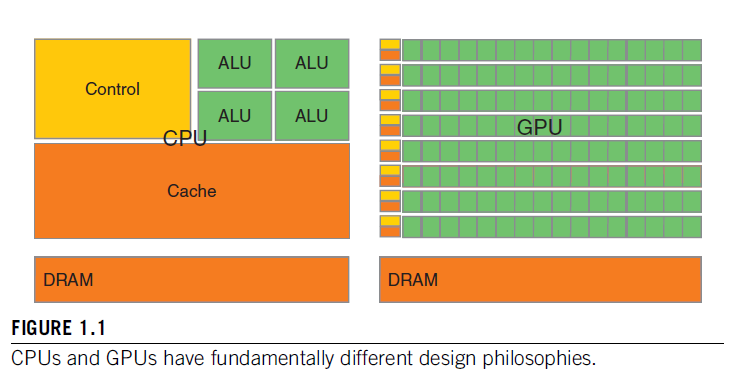

I am excited to get you started on one of the most important pathways to emerge in technology: Parallel and Cloud Computing. Never before have such powerful tools been so widely available, and they are enabling new forms of computation and new designs for algorithms. The diagram above illustrates the large increase in computational resources available for our use. The green boxes represent registers inside a computing device. On the left is a traditional Central Processing Unit (CPU) with just 4 Arithmetic Logic Units (ALUs). On the right is one Streaming Multiprocessor (SM) with 128 ALUs. An SM is one of many coordinated computing elements found in a Graphics Processing Unit (GPU), and the entire GPU typically contains many modules like the one shown. The result is thousands of ALUs at your disposal for programming. How do we use them all? That is the subject of this course.

New Computing Paradigms

Most modern programming courses teach you how to write and debug a program that executes the steps of an algorithm sequentially, using a single thread of control. This form of programming leaves most of the resources in a Central Processing Unit (CPU) idle and underutilized. The rapid growth of data, and the many ways in which we wish to view and use information within a limited amount of time, make this approach inadequate. The new computing paradigm requires that we analyze a problem to see which parts can be solved independently, and then dispatch several CPUs (or CPU cores) in a coordinated fashion to complete a solution in less time than one CPU working alone. Enabling a student to acquire and demonstrate these analytical and implementation skills is the goal of "Introduction to Parallel and Cloud Programming."
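To make "solved independently" and "coordinated fashion" concrete, here is a minimal sketch in standard C++ (C++11 or later) that uses only `std::thread`. The function name `parallel_sum` and the equal-size chunking are illustrative choices, not course code. Each thread sums its own slice of the input into its own slot, so no locking is needed, and `join()` is the coordination step that waits for every thread before the partial results are combined.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <thread>
#include <vector>

// Hypothetical example: sum `data` by splitting it into `num_threads`
// independent chunks, one per thread.
long long parallel_sum(const std::vector<int>& data, unsigned num_threads) {
    if (num_threads == 0) num_threads = 1;
    std::vector<long long> partial(num_threads, 0);  // one result slot per thread
    std::vector<std::thread> workers;
    const std::size_t n = data.size();
    const std::size_t chunk = (n + num_threads - 1) / num_threads;  // ceiling division

    for (unsigned t = 0; t < num_threads; ++t) {
        const std::size_t begin = t * chunk;
        const std::size_t end = std::min(n, begin + chunk);
        workers.emplace_back([&data, &partial, t, begin, end] {
            long long local = 0;
            for (std::size_t i = begin; i < end; ++i) local += data[i];
            partial[t] = local;  // independent work: each thread writes only its own slot
        });
    }
    for (auto& w : workers) w.join();  // coordination: wait for all threads to finish
    return std::accumulate(partial.begin(), partial.end(), 0LL);
}
```

On GCC or Clang you may need to link with `-pthread`. The result is the same for any thread count, which is exactly the property you later check against a serial version.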

Upgrade Your Computing Resources

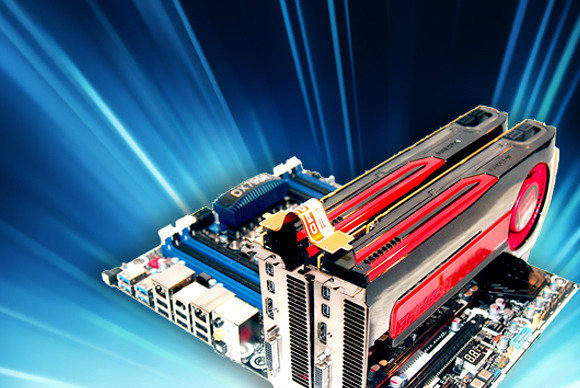

Graphics Processing Units (GPUs) represent an epic shift in programmable computing resources. They offer a new way to solve problems that were once too hard or too resource-intensive to attack. Workloads such as training neural networks and large-scale data science are now within reach because of the massive computing power now available.

Getting Started - Basic Skills

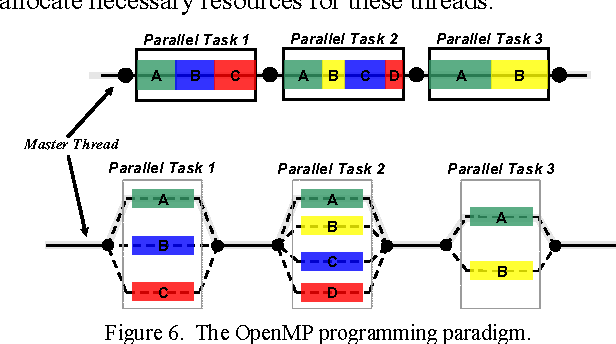

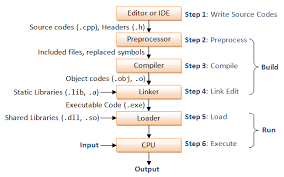

To begin this course, you need to be able to design an algorithm and implement it in an object-oriented language, preferably C++, for execution in a serial, single-threaded process. This is the foundational skill you should have, or acquire during the orientation. You will rewrite this core program to leverage different forms of parallel programming. This carefully scopes the new skills being acquired, so your efforts are focused on learning different ways to increase the throughput of a program. Modes explored include running several copies of the same program concurrently, running several threads within the same program, and running parts of the program on a GPU while other parts execute on a CPU. You will then be able to adapt other programs to use different forms of parallel execution and coordinated hardware resources. The program you first write to execute serially will be used to verify that your parallel solutions produce correct results and to estimate the resulting speed-up (or slow-down!) of each parallel implementation.
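One way to use the serial program as a reference is sketched below. It assumes a sum-of-squares computation as a stand-in for your own algorithm, and the names `serial_sum_squares`, `parallel_sum_squares`, `seconds`, `Comparison`, and `compare` are hypothetical. The harness runs both versions, checks the parallel result against the serial one, and reports speed-up as serial time divided by parallel time, so a value below 1.0 is a slow-down.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <cmath>
#include <cstddef>
#include <future>
#include <vector>

// Serial reference: the trusted baseline every parallel version is checked against.
double serial_sum_squares(const std::vector<double>& x) {
    double s = 0.0;
    for (double v : x) s += v * v;
    return s;
}

// Hypothetical parallel version: the upper half runs on another thread via
// std::async while the calling thread handles the lower half.
double parallel_sum_squares(const std::vector<double>& x) {
    const std::size_t mid = x.size() / 2;
    auto partial = [&x](std::size_t begin, std::size_t end) {
        double s = 0.0;
        for (std::size_t i = begin; i < end; ++i) s += x[i] * x[i];
        return s;
    };
    auto upper = std::async(std::launch::async, partial, mid, x.size());
    const double lower = partial(0, mid);
    return lower + upper.get();
}

// Wall-clock time of one call to f, in seconds.
template <typename F>
double seconds(F&& f) {
    const auto start = std::chrono::steady_clock::now();
    f();
    const std::chrono::duration<double> elapsed = std::chrono::steady_clock::now() - start;
    return elapsed.count();
}

struct Comparison {
    bool correct;    // parallel result matches the serial result within tolerance
    double speedup;  // serial time / parallel time; below 1.0 means a slow-down
};

Comparison compare(const std::vector<double>& x) {
    double ref = 0.0, par = 0.0;
    const double t_serial = seconds([&] { ref = serial_sum_squares(x); });
    const double t_parallel = seconds([&] { par = parallel_sum_squares(x); });
    // Assumed relative tolerance of 1e-9: the parallel code adds terms in a
    // different order, so floating-point rounding may differ slightly.
    const bool ok = std::abs(ref - par) <= 1e-9 * std::max(1.0, std::abs(ref));
    const double speedup = t_parallel > 0.0 ? t_serial / t_parallel : 0.0;
    return {ok, speedup};
}
```

The tolerance-based check, rather than `==`, reflects that reordering floating-point additions can change the last bits of a result. For small inputs the cost of starting a thread can outweigh the work itself, which is one common source of the slow-down mentioned above.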

What You Can Learn

Provisioning and Use of Cloud Computers

In this course you will learn how to use an Amazon Web Services (AWS) cloud computer equipped with a GPU to write and run programs. If you have a computer with a suitable GPU, there are tips to help you configure it for local execution of GPU programs. You can also learn the basics of programming in OpenCL, MPI, and CUDA, classic concurrent programming, and the use of object-oriented programming to hide the complexity of parallel programming Application Programming Interfaces (APIs).
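As a small illustration of using object-oriented design to hide a parallel API, the hypothetical `ParallelFor` class below wraps `std::thread` so callers only supply a per-index task. Thread creation, round-robin assignment of indices, and joining all stay inside the class. This is a sketch under those assumptions, not the interface used in the course.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

// Hypothetical wrapper: callers never touch std::thread or join() directly.
class ParallelFor {
public:
    explicit ParallelFor(unsigned num_threads)
        : num_threads_(num_threads == 0 ? 1 : num_threads) {}

    // Run body(i) for every i in [0, n). Thread t handles indices
    // t, t + num_threads_, t + 2 * num_threads_, ... (round-robin).
    void run(std::size_t n, const std::function<void(std::size_t)>& body) const {
        std::vector<std::thread> threads;
        for (unsigned t = 0; t < num_threads_; ++t) {
            threads.emplace_back([&, t] {
                for (std::size_t i = t; i < n; i += num_threads_) body(i);
            });
        }
        for (auto& th : threads) th.join();  // all work is complete when run() returns
    }

private:
    unsigned num_threads_;
};
```

Because `run()` joins every thread before returning, callers can read their results immediately afterward. In principle the body of `run()` could be replaced by a different mechanism, such as a thread pool, without changing any calling code.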

XLabs - Extra Credit for Setting Up Your Own System

Embedded within the course are XLabs: projects you can complete as you go along that help you set up your own GPU-equipped system for parallel programming. You can earn extra credit toward your final grade by submitting screenshots and other evidence that you are building your own local parallel programming workstation. Selecting and configuring a complete toolchain (hardware, drivers, libraries, and frameworks) is a skill set many system administrators normally learn on their own after much trial and error. Even though each student's computer is different, working through the XLabs gives you a well-curated set of instructions that provides more accurate and up-to-date information than you are likely to discover on your own.

If you have the good fortune to get a new system that you can use for programming, look at the list below to help specify components that will meet your needs.

- System76 is a vendor that lets you customize systems for GPU computation.
- A list of consumer-grade NVIDIA GPUs can be found in the GeForce section. If you pick a system with one of these GPUs, your workstation should be able to run CUDA.
- AMD and Intel GPUs that support compute operations are listed on the OpenCL site. If you have a system with one of these, you can run OpenCL, SYCL, ComputeCpp, and AMD ACC code.
- System76 also provides an Ubuntu-based Linux distribution with integrated GPU compute drivers for either NVIDIA or AMD.
- A general GPU computing wiki is managed by BOINC.

Terms of Art - Domain-Specific Phrases Used by Professionals

In a broad and rapidly changing field like parallel and cloud computing, you must use specific words and phrases that carry an unambiguous meaning if you want to be part of a team. Terms of art let others understand your intended behavior and use case so they can ensure their modules work with yours. Scan, reduce, barrier, semaphore, critical section, and mutex are just the tip of the iceberg. If others must struggle to understand what you mean, you will quickly be removed from the project (fired), because you are slowing down your team members individually and overall team progress. The time to master these terms of art is BEFORE you are engaged as a contributor to a High Performance Computing (HPC) project.
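To tie a few of these terms to code, the sketch below uses only the C++ standard library: `std::accumulate` as a sequential reduce, `std::partial_sum` as an inclusive scan, and a `std::mutex` guarding a critical section. The function names `reduce_sum`, `prefix_sum`, and `count_with_mutex` are hypothetical. Barriers and semaphores are not shown; C++20 provides them as `std::barrier` and `std::counting_semaphore`.

```cpp
#include <cassert>
#include <mutex>
#include <numeric>
#include <thread>
#include <vector>

// Reduce: combine all elements into one value with an associative operator (+ here).
int reduce_sum(const std::vector<int>& v) {
    return std::accumulate(v.begin(), v.end(), 0);
}

// Scan (inclusive prefix sum): out[i] = v[0] + v[1] + ... + v[i].
std::vector<int> prefix_sum(const std::vector<int>& v) {
    std::vector<int> out(v.size());
    std::partial_sum(v.begin(), v.end(), out.begin());
    return out;
}

// Critical section: code that only one thread may execute at a time.
// The mutex ensures concurrent increments of `counter` are not lost.
int count_with_mutex(int num_threads, int increments_per_thread) {
    int counter = 0;
    std::mutex m;
    std::vector<std::thread> threads;
    for (int t = 0; t < num_threads; ++t) {
        threads.emplace_back([&] {
            for (int i = 0; i < increments_per_thread; ++i) {
                std::lock_guard<std::mutex> lock(m);  // enter the critical section
                ++counter;                            // touch shared state
            }                                         // lock released here
        });
    }
    for (auto& th : threads) th.join();  // main thread waits for all workers
    return counter;
}
```

Without the `std::lock_guard`, two threads could read the same old value of `counter` and both write back the same incremented value, losing an update; that lost-update hazard is what "critical section" and "mutex" name.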